At CleverMaps we heavily rely on the cadastre of real estate, which is probably the biggest data source in my country. Using their excellent knowledge of this data set, my teammates often supply me with all kinds of weird challenges.

Give me the biggest land users nearby

Find the biggest land users in surrounding cadastral communities for each cadastral community (~ 13K) being the latest task, here’s the query I tackled it with:

WITH users_ AS (

SELECT

cad_code,

id,

zipcode,

city,

concat_ws(' ',street, concat_ws('/', house_number, street_number)) as street,

name,

'Users with more than 10 ha'::text note,

SUM(acreage) area

FROM land_blocks -- being a table with info about all the agricultural land blocks

JOIN users u ON id_uz = id

GROUP BY cad_code, u.id

HAVING SUM(acreage) > 10

),

ints AS (

SELECT

ku.cad_code as community,

ku2.cad_code as surrounding,

ku2.cad_name

FROM cadastral_community ku

JOIN cadastral_community ku2

ON ST_Intersects(ku.geom, ku2.geom)

WHERE ku.cad_code <> ku2.cad_code

)

SELECT

DISTINCT ON (surrounding, cad_name, u.zipcode, u.city, u.street, u.name)

surrounding,

cad_name,

u.zipcode,

u.city,

u.street,

u.name,

u.note,

u.area

FROM

users_ u

JOIN ints

ON cad_code = community;

Few things to note:

concat_ws() is a great function for joining values that might be NULL. If such a value is found, it is skipped and the function continues with the next one (if any). Thus, you’ll never get a string ending with a trailing slash (Street name 55/).- With

users_ CTE I get a list of owners having more than 10 hectares of land for each cadastral community. This gives me the inverse result of what I want (if I know the biggest owners in the cadastral community, I know these are the ones that should be listed for surrounding c. communities).

- This putting-it-all-together step is done with

ints CTE that outputs the list of surrounding c. communities for each of them.

DISTINCT ON cleans up the list so the same owners don’t appear more than once for any given c. community.

Writing this makes me realize the list should be probably sorted by area so only the occurence with the biggest value is kept for each c. community. Simple ORDER BY should deal with this just fine. Or even more sophisticated, using GROUP BY to output the total acreage in all surrounding c. communities.

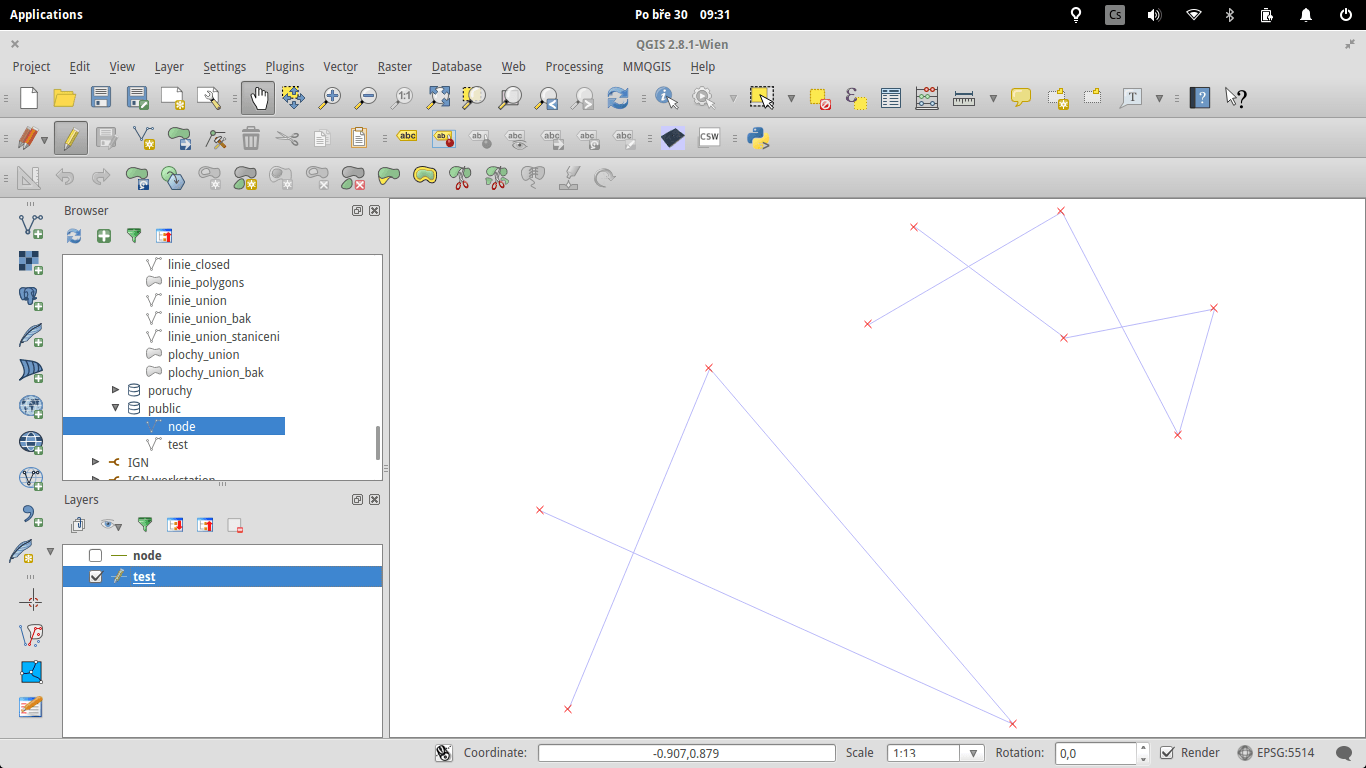

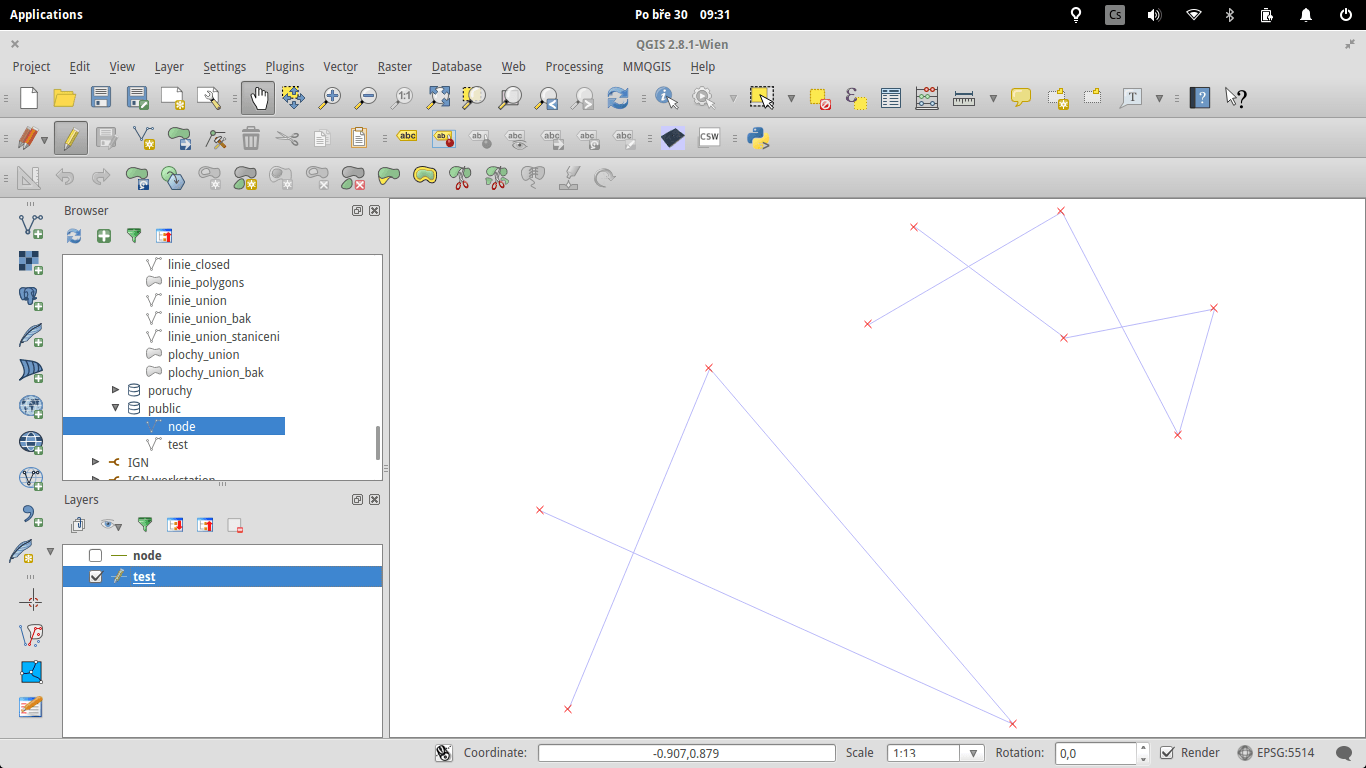

Is there a way of using PostgreSQL + PostGIS for finding the number of self intersections in a linestring? was a question that made me think of this problem. I came up with a solution that takes just a few lines of code.

Assume the following geometries:

CREATE TABLE test2 (

id integer NOT NULL,

wkb_geometry geometry(LineString,5514)

);

COPY test2 (id, wkb_geometry) FROM stdin;

1 01020000208A15000004000000CCDC7845E339EEBFF2003B4A8A08E1BFE4154DAB7C31DCBF24C2042773E3E53F2287BA2CC591E43F604749BFE3B2E2BF2AE9770A11B8F0BF9C91435D56C0C63F

2 01020000208A1500000600000050212BF9E63EC03F1FA046FD69F1EA3F504D44212915EA3F74A99EDF44E3F33F2CE2805DFAB1F33F805D24B1B189DC3F9834DE5938C1F53FB56F1FBF8AAFEC3F24D0C85B4666EA3FF311B0D8D75BE93F306EAA073894D23FA841B27E3404F33F

\.

Note that those geometries are valid while not being simple, thus, ST_IsValidReason() wouldn’t help much. What if we compared it to their single counterparts? Those would have had vertices at intersections. Once you know the original number of vertices and the number of simple geometry vertices, it is fairly easy to subtract those two.

WITH noded AS (

SELECT id, COUNT(id)

FROM (

SELECT DISTINCT (ST_DumpPoints(ST_Node(wkb_geometry))).geom, id

FROM test

) tmp group by id

),

test AS (

SELECT id, COUNT(id)

FROM (

SELECT DISTINCT (ST_DumpPoints(wkb_geometry)).geom, id

FROM test

) tmp group by id

)

SELECT noded.id, noded.count - test.count cnt FROM noded JOIN test USING (id);

This query gives you geometry id and the difference in number of vertices between the original and simple geometry. Note the DISTINCT in the noded CTE - with ST_Node() you get one vertex x number of intersecting lines for each intersection. DISTINCT gives you just one of them.

The query result on my test table:

Creating a rectangular grid to cover a given extent with same sized cells is one of the basic GIS tasks I’ve had to solve several times so far. I used QGIS or some Python to give me a bunch of INSERT statements to run in PostGIS database, now I’ve come with a final solution at last.

CREATE OR REPLACE FUNCTION cm_grid(

blx float8, -- bottom left x coordinate

bly float8, -- bottom left y coordinate

trx float8, -- top right x coordinate

try float8, -- top right y coordinate

xsize float8, -- cell width

ysize float8, -- cell height

srid integer DEFAULT 5514,

OUT col varchar,

OUT "row" varchar,

OUT geom geometry

) RETURNS SETOF record AS

$$

DECLARE

width float8; -- total area width

height float8; -- total area height

cols integer;

rows integer;

BEGIN

width := @($1 - $3); -- absolute value

height := @($2 - $4); -- absolute value

cols := ceil(width / xsize);

rows := ceil(height / ysize);

RETURN QUERY

SELECT

cast(

lpad((i)::varchar,

CASE WHEN

char_length(rows::varchar) > char_length(cols::varchar)

THEN char_length(rows::varchar)

ELSE char_length(cols::varchar)

END,

'0')

AS varchar

) AS row,

cast(

lpad((j)::varchar,

CASE WHEN

char_length(rows::varchar) > char_length(cols::varchar)

THEN char_length(rows::varchar)

ELSE char_length(cols::varchar)

END,

'0') AS varchar

) AS col,

ST_SetSRID(

ST_GeomFromText(

'POLYGON((' ||

array_to_string(

ARRAY[i * xsize + blx, j * ysize + bly],

' '

) || ',' ||

array_to_string(

ARRAY[i * xsize + blx, (j+1) * ysize + bly],

' '

) || ',' ||

array_to_string(

ARRAY[(i+1) * xsize + blx, (j+1) * ysize + bly],

' '

) || ',' ||

array_to_string(

ARRAY[(i+1) * xsize + blx, j * ysize + bly],

' '

) || ',' ||

array_to_string(

ARRAY[i * xsize + blx, j * ysize + bly],

' '

) || '

))'

)

, srid) AS geom

FROM

generate_series(0, cols) AS i,

generate_series(0, rows) AS j;

END;

$$

LANGUAGE plpgsql;

And you call it like this:

CREATE TABLE grid AS

SELECT *

FROM cm_grid(-675593.69, -1057711.19, -672254.69, -1054849.19, 333.47, 333.47);

Few notes:

- it takes bounding box coordinates (bottom left, top right) for an extent,

- followed by cell width and height,

- and optional SRID (defaults to 5514 which is Czech national grid),

- each cell is indexed with

row and col number

The messy CASE statement makes sure both row and col are of the same length. I used array_to_string for better readability. It might not be the fastest way, didn’t do any benchmarks.

Being part of CleverMaps means doing lot of nasty work with PostGIS. Recently, I’ve been given a following task that needed to be done for a really big project dealing with agricultural parcels:

- given a polygonal shapefile of agricultural parcels, create 20m wide buffers around all of them,

- extract holes from these parcels,

- clip buffers so they don’t overlap with other parcels,

- get rid of overlaps between nearby parcels (e.g. dissolve them),

- create output combined from holes and buffers,

- the output must not contain features having more than ~1,000,000 vertices

This process is going to be run ~20× on layers with ~40,000-70,000 polygons.

Input data

- polygonal layer of agricultural parcels

- rectangular grid (7.5 × 7.5 km) for cutting the output

First try

My initial effort was to union all the buffers and then clip them with a rectangular grid. Long story short: Don’t do that. Never. Ever. I mean it.

It works fine until you end up with one huge multipolygon having like ~2,000,000 vertices. But then you need to split it somehow so you meet the 1,000,000 limit rule (see list above). Spatial index doesn’t help you much in such cases, so that really huge polygon is being cut by every rectangle it intersects and it takes hours and hours. It’s just a no go.

The other way round

Let’s put it the other way round. First, split buffers by rectangular grid, doing union on each cell separately.

Import

Using the swiss knife of GIS to import the data:

export SHAPE_ENCODING="ISO-8859-1"

ogr2ogr -f PostgreSQL PG:"dbname=db user=postgres" parcels.shp -lco ENCODING=UTF-8 -t_srs "EPSG:2154"

ogr2ogr -f PostgreSQL PG:"dbname=db user=postgres" grid.shp -lco ENCODING=UTF-8 -t_srs "EPSG:2154"

PostGIS processing

Recently I stumbled upon a psql \set command. Launching several queries on the same table, it might be useful to define it’s name with \set table tablename:

\set table 'parcels'

-- prepare separate table for holes (inner rings)

DROP TABLE IF EXISTS holes;

CREATE TABLE holes (

id serial,

ilot_id varchar,

wkb_geometry geometry('Polygon', 2154),

path integer);

I found the following query an easy way to get all the rings from geometries having more than one ring:

INSERT INTO holes (ilot_id, wkb_geometry, path) (

SELECT id,

(ST_DumpRings(wkb_geometry)).geom::geometry('Polygon', 2154) as wkb_geometry,

unnest((ST_DumpRings(wkb_geometry)).path) as path

FROM :table

WHERE ST_NRings(wkb_geometry) > 1

);

Here’s a little trick. Doing some checks I found out that some of the polygons had two rings without having any inner ring, both of them being the same. I guess this comes from some kind of human error. This query thus deletes all rings with path = 0 (exterior rings). At the same time, it deals with that invalid polygons by checking their spatial relationship to parcels.

DELETE FROM holes

WHERE path = 0

OR id IN (

SELECT holes.id

FROM holes

JOIN :table ON

ST_Within(

ST_Buffer(holes.wkb_geometry,-1),

:table.wkb_geometry

)

AND holes.wkb_geometry && :table.wkb_geometry

);

To my surprise, it is possible that parcel has a hole with another parcel being in that hole. Argh. Find those and get rid of them.

DROP TABLE IF EXISTS ints;

CREATE TABLE ints AS

SELECT holes.*

FROM holes

JOIN :table ON ST_Intersects(holes.wkb_geometry, :table.wkb_geometry)

AND ST_Relate(holes.wkb_geometry, :table.wkb_geometry, '2********');

DELETE FROM holes

WHERE id IN (SELECT id FROM ints);

I still need to get the difference between the hole geometry and the parcel that resides inside it - this difference is the actual hole I’m looking for.

DROP TABLE IF EXISTS diff_ints;

CREATE TABLE diff_ints AS

SELECT

ints.id,

ST_Difference(

ints.wkb_geometry,

ST_Collect(:table.wkb_geometry)

) wkb_geometry

FROM ints, :table

WHERE ST_Within(:table.wkb_geometry, ints.wkb_geometry)

GROUP BY ints.wkb_geometry, ints.id;

And I’m done with holes. Get back to buffers.

DROP TABLE IF EXISTS buffer;

CREATE TABLE buffer AS

SELECT id, ST_Buffer(wkb_geometry, 20) wkb_geometry

FROM :table;

CREATE INDEX buffer_gist_idx ON buffer USING gist(wkb_geometry);

ALTER TABLE buffer ADD PRIMARY KEY(id);

VACUUM ANALYZE buffer;

Combine all the parts together.

DROP TABLE IF EXISTS diff;

CREATE TABLE diff AS

SELECT a.id, ST_Difference(a.wkb_geometry, ST_Union(ST_MakeValid(b.wkb_geometry))) as wkb_geometry

FROM buffer a, :table b

WHERE ST_Intersects(a.wkb_geometry, b.wkb_geometry)

GROUP BY a.id, a.wkb_geometry

UNION

SELECT id::varchar, wkb_geometry FROM holes

UNION

SELECT id::varchar, wkb_geometry FROM diff_ints;

CREATE INDEX diff_gist_idx ON diff USING gist(wkb_geometry);

VACUUM ANALYZE diff;

Collect the geometries in every cell, simplify them a little, snap them to 3 decimal numbers, make them valid and dump them to simple features. This query takes ~300,000 ms which is orders of magnitude faster than my initial attempt.

DROP TABLE IF EXISTS uni;

CREATE TABLE uni AS

SELECT

g.ogc_fid AS grid_id,

(ST_Dump(

ST_MakeValid(

ST_SnapToGrid(

ST_SimplifyPreserveTopology(

ST_CollectionExtract(

ST_Buffer(

ST_Collect(

ST_Intersection(a.wkb_geometry, g.wkb_geometry)

)

, 0)

, 3)

, 0.1)

, 0.001)

)

)).geom as wkb_geometry

FROM diff a, grid g

WHERE ST_Intersects(a.wkb_geometry, g.wkb_geometry)

GROUP BY g.ogc_fid;

After running the query it is reasonable to check the results. I’m only interested in polygonal geometries, ST_GeometryType() would tell me of any other geometry type. Invalid geometries are not allowed.

SELECT DISTINCT ST_GeometryType(wkb_geometry) FROM uni;

SELECT COUNT(1) FROM uni WHERE NOT ST_IsValid(wkb_geometry);

Add primary key on serial column as a last SQL step.

ALTER TABLE uni ADD COLUMN id serial;

ALTER TABLE uni ADD PRIMARY KEY(id);

Export

And spit it out as a shapefile.

ogr2ogr -f "ESRI Shapefile" output.shp PG:"dbname=ign user=postgres" uni -s_srs "EPSG:2154" -t_srs "EPSG:2154" -lco ENCODING=UTF-8

Lesson learned

- More of little seems to be faster than less of bigger.

- Never stop learning and trying different approaches.

- Although using

CTE might be tempting, creating separate tables for separate steps of the whole process is much more comfortable for debugging.

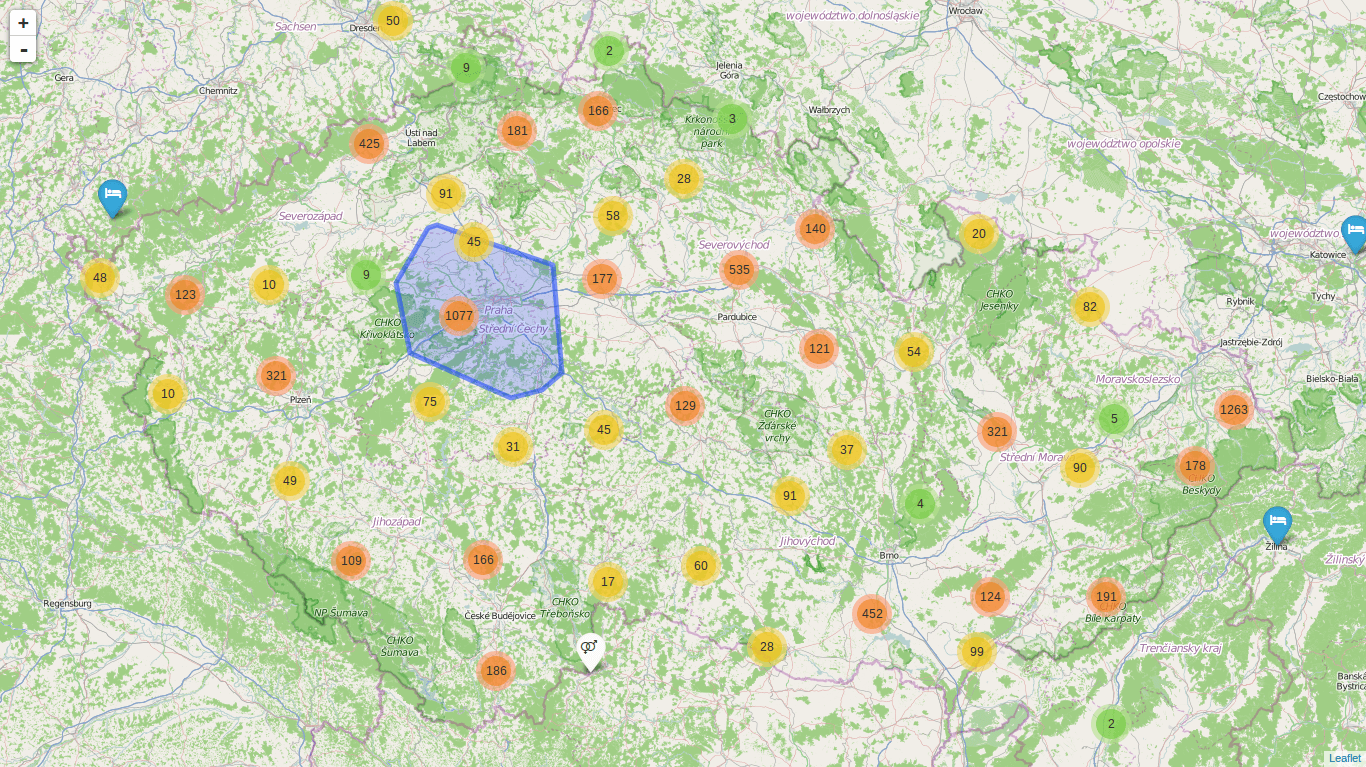

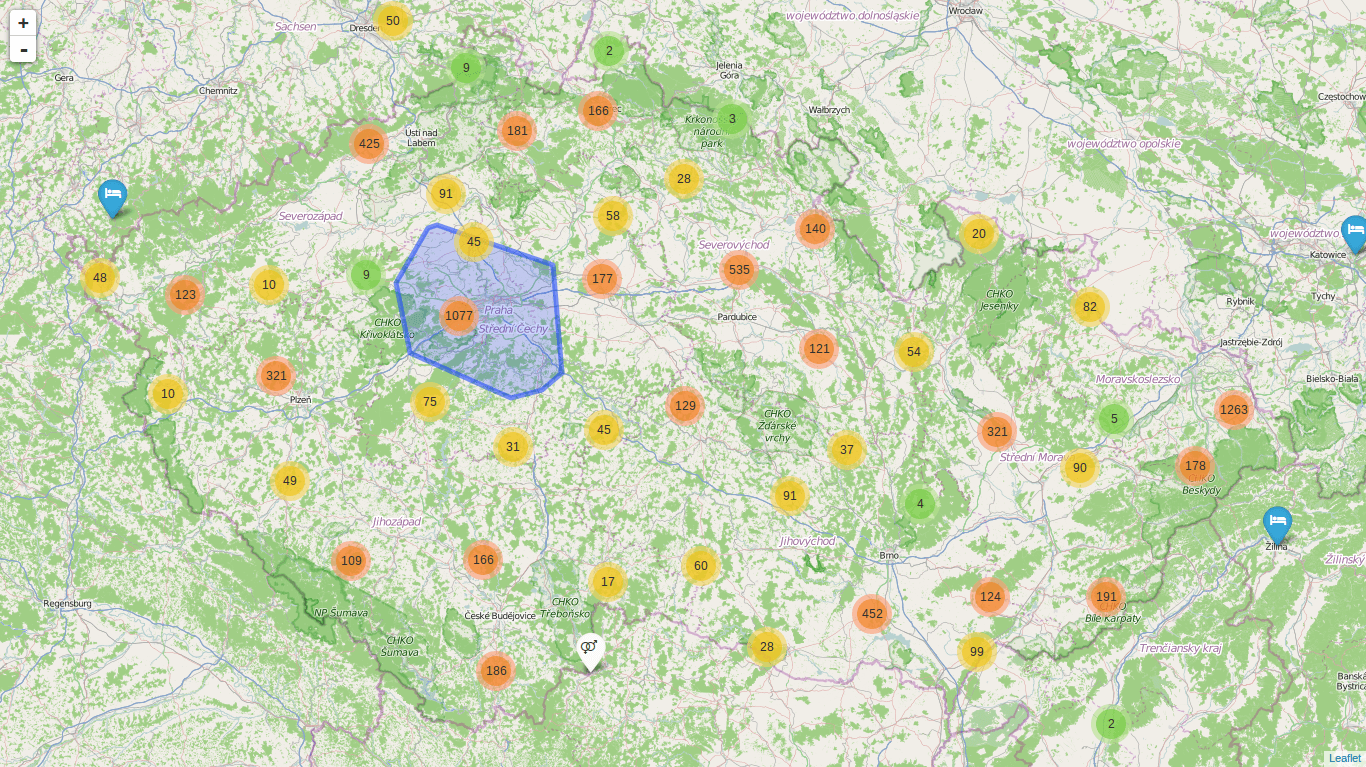

In the first part of my little case study I downloaded vozejkmap.cz dataset and imported it into the PostGIS database. Having spatial data safely stored the time comes to get it onto the map. Libraries used are:

I teach cartography visualization classes this semester and this map should serve well as an example of what can be done with online maps.

Retrieving data from the PostGIS database

Our goal is to build the whole map as a static HTML page without any backend logic. Thus, data needs to be extracted from the database into the format readable with Leaflet - GeoJSON.

That’s fairly easy with the postgresonline.com tutorial. It took me quite a time to find out what the following query does. Splitting it into smaller chunks helped a lot.

SELECT row_to_json(fc)

FROM (

SELECT 'FeatureCollection' AS type,

array_to_json(array_agg(f)) AS features

FROM (SELECT 'Feature' AS type,

ST_AsGeoJSON(lg.geom)::json As geometry,

row_to_json((SELECT l FROM (SELECT id, title, location_type, description, author_name, attr1, attr2, attr3) AS l

)) AS properties

FROM vozejkmap AS lg ) AS f ) AS fc \g /path/to/file.json;

To get all rows with type, geometry and properties columns (these are the ones defined in GeoJSON specification, see the link above), run this:

SELECT 'Feature' AS type,

ST_AsGeoJSON(lg.geom)::json As geometry,

row_to_json((SELECT l FROM (SELECT id, title, location_type, description, author_name, attr1, attr2, attr3) AS l

)) AS properties

FROM vozejkmap AS lg

array_agg() squashes all the rows into an array while array_to_json() returns the array as JSON.

SELECT 'FeatureCollection' AS type,

array_to_json(array_agg(f)) AS features

FROM (SELECT 'Feature' AS type,

ST_AsGeoJSON(lg.geom)::json As geometry,

row_to_json((SELECT l FROM (SELECT id, title, location_type, description, author_name, attr1, attr2, attr3) AS l

)) AS properties

FROM vozejkmap AS lg ) AS f

In the last step (the whole code as shown above) row_to_json returns the result as JSON.

Caveats

If you run this code from the psql console, be sure you

- set show only row to true with

\t

- set expanded output to false with

\x off

If you don’t, you’ll have lots of hyphens and column names saved to the json file.

Leaflet map

Map JavaScript is rather simple with ~30 lines of code (not taking styles into account). Thanks to the great plugins it is easy to show ~7,600 points on the map real quick.

I didn’t do much customization apart from styling markers and binding popups.

What’s next

- Turf which means I need to think of what could be fun to do with this data

- Layers switching

- Map key (by extending L.Control)

The code is still available at my GitHub.