Last time I described the setup of my OpenShift Twitter crawler and left it running and downloading data. It’s been more than two months since I started and I’ve collected an interesting amount of data. I also wrote a simple ETL process to load it into my local PostGIS database, which I’d like to cover in this post.

Extract data

Each day is written to a separate SQLite file with a name like tw_day_D_M_YYYY. A Bash script packs all the files into a gzipped tar archive before downloading them from OpenShift.

#!/bin/bash

ssh openshift << EOF

cd app-root/data

tar czf twitter.tar.gz *.db

EOF

scp openshift:/var/lib/openshift/55e487587628e1280b0000a9/app-root/data/twitter.tar.gz ./data

cd data &&

tar -xzf twitter.tar.gz &&

cd -

echo "Extract done"
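If the day and month in tw_day_D_M_YYYY are not zero-padded, as the pattern suggests, the file names will not sort chronologically. Here is a minimal sketch of a helper that turns a file name into an ISO date; the function name day_file_to_iso is hypothetical and not part of the original scripts.

```shell
#!/bin/bash

# Hypothetical helper: turn "tw_day_D_M_YYYY" (optionally with a directory
# and a file extension) into an ISO date "YYYY-MM-DD".
day_file_to_iso() {
  local name day month year
  name=$(basename "$1")
  name=${name%.*}          # strip the extension, if there is one
  name=${name#tw_day_}     # strip the fixed prefix, leaving D_M_YYYY
  day=${name%%_*}
  name=${name#*_}
  month=${name%%_*}
  year=${name#*_}
  # Drop one leading zero so printf does not read values like "08" as octal.
  printf '%04d-%02d-%02d\n' "$year" "${month#0}" "${day#0}"
}

# Usage (commented out): list the databases in chronological order.
# for db in ./data/*.db; do
#   printf '%s %s\n' "$(day_file_to_iso "$db")" "$db"
# done | sort
```

Prefixing each path with its ISO date and piping through sort is enough to process the days in order.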

Transform data

The transformation part operates on the downloaded files and merges them into one big CSV file. It’s pretty straightforward: sqlite3 flags handle the CSV export, and a little sed and tail take care of the merge.

#!/bin/bash

rm -rf ./data/csv

mkdir ./data/csv

for db in ./data/*.db; do

FILENAME=$(basename "$db")

DBNAME=${FILENAME%%.db} # the table inside has the same name as the file

CSVNAME=$DBNAME.csv

echo "$DBNAME to csv..."

sqlite3 -header -csv "$db" "select * from $DBNAME;" > "./data/csv/$CSVNAME"

done

cd ./data/csv

touch tweets.csv

head -n 1 "$(ls tw_*.csv | head -n 1)" > tweets.csv # get column names from the first per-day file (a plain *.csv would also match the empty tweets.csv)

for csv in tw_*.csv; do

echo $csv

tail -n +2 $csv >> tweets.csv # get all lines without the first one

done
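The header-plus-tail merge above can also be written as a single awk pass. This is only a sketch of an alternative, not the script I actually ran; the function name merge_csv is hypothetical.

```shell
#!/bin/bash

# Hypothetical helper: concatenate CSV files, keeping only the header line
# of the first one. Usage: merge_csv OUTPUT INPUT...
merge_csv() {
  local out=$1
  shift
  # FNR is the line number within the current file, NR the overall one:
  # skip line 1 of every file except the very first line read.
  awk 'FNR == 1 && NR != 1 { next } { print }' "$@" > "$out"
}

# Usage (commented out), run inside ./data/csv:
# merge_csv tweets.csv tw_*.csv
```

The output file must not match the input pattern, which is why tw_*.csv is used rather than *.csv.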

Load data

In the last step, the data is loaded with the psql \copy meta-command.

#!/bin/bash

export PG_USE_COPY=YES # GDAL/OGR setting; psql itself does not read it

DATABASE=mzi_dizertace

SCHEMA=dizertace

TABLE=tweets

psql $DATABASE << EOF

BEGIN; -- open the transaction that the final COMMIT closes

DROP TABLE IF EXISTS $SCHEMA.$TABLE;

CREATE UNLOGGED TABLE $SCHEMA.$TABLE (id text, author text, author_id text, tweet text, created_at text, lon float, lat float, lang text);

\copy $SCHEMA.$TABLE FROM 'data/csv/tweets.csv' CSV HEADER DELIMITER ','

ALTER TABLE $SCHEMA.$TABLE ADD COLUMN wkb_geometry geometry(POINT, 4326);

UPDATE $SCHEMA.$TABLE SET wkb_geometry = ST_SetSRID(ST_MakePoint(lon, lat), 4326);

CREATE INDEX ${TABLE}_geom_idx ON $SCHEMA.$TABLE USING gist(wkb_geometry);

COMMIT;

EOF
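With the plain HEADER option, \copy skips the first line of the file rather than matching it against the column names, so a CSV with reordered columns would load into the wrong fields. A minimal pre-load check could look like the sketch below. It assumes the SQLite columns carry the same names as the PostgreSQL ones; the function name check_csv_header is hypothetical.

```shell
#!/bin/bash

# Column list copied from the CREATE TABLE statement above.
# Assumption: the SQLite tables use the same column names.
EXPECTED_HEADER="id,author,author_id,tweet,created_at,lon,lat,lang"

# Succeeds only if the first line of the given CSV equals the expected header.
check_csv_header() {
  local header
  # tr drops a carriage return in case the CSV has CRLF line endings.
  header=$(head -n 1 "$1" | tr -d '\r')
  [ "$header" = "$EXPECTED_HEADER" ]
}

# Usage (commented out): stop before loading if the header looks wrong.
# check_csv_header data/csv/tweets.csv || { echo "Unexpected CSV header" >&2; exit 1; }
```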

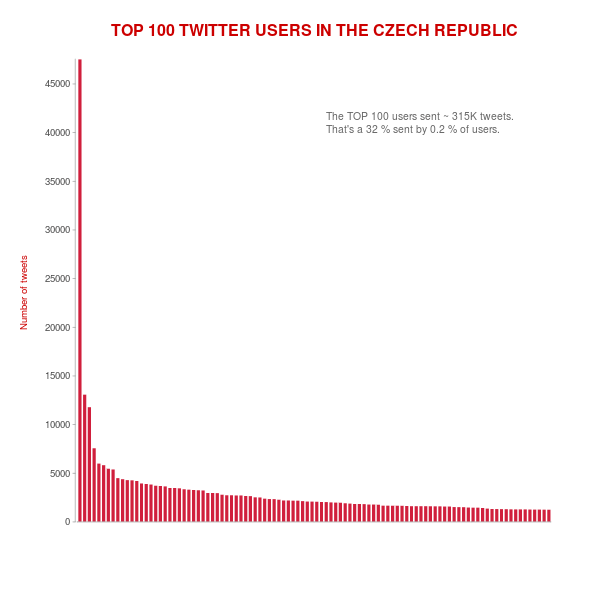

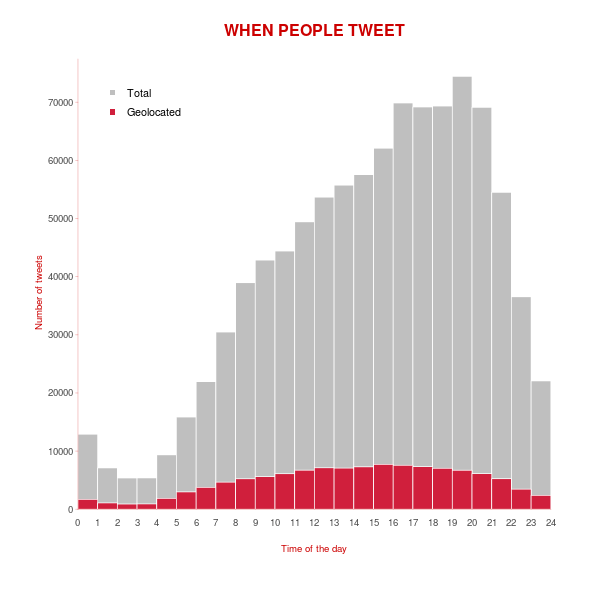

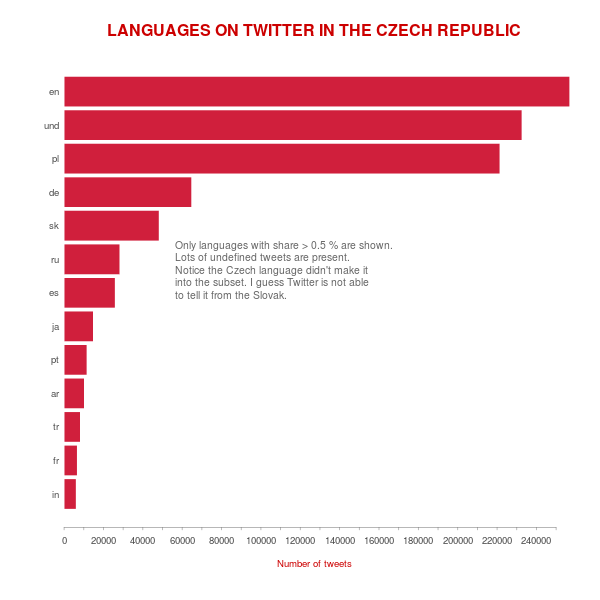

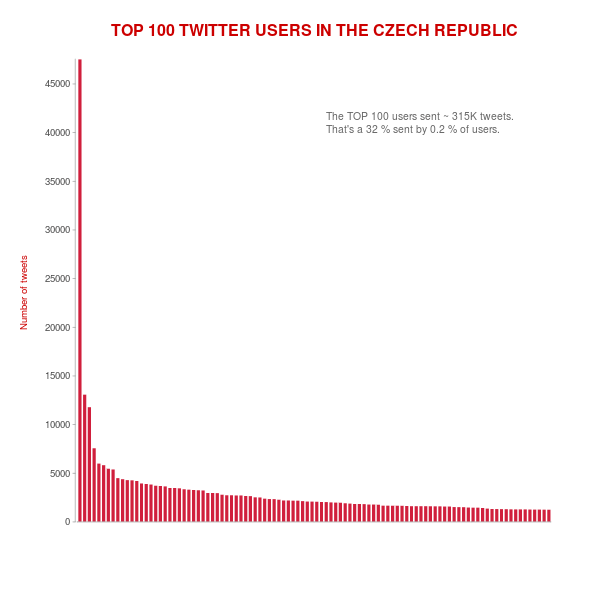

First statistics

Some interesting charts and numbers follow.

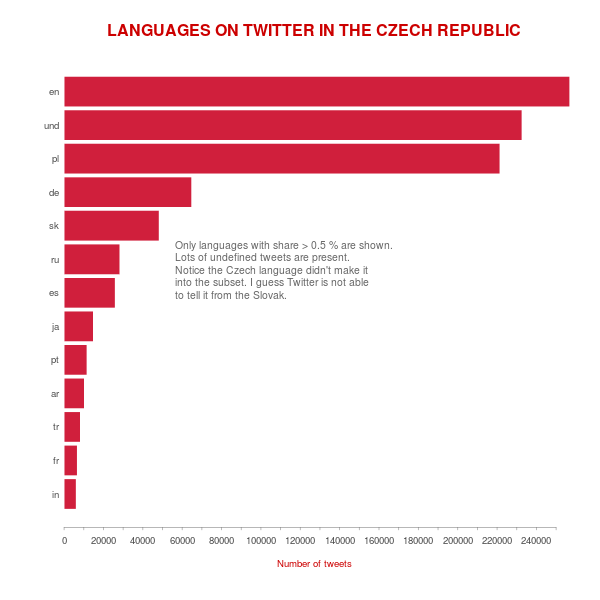

More than a year ago I wrote about analyzing Twitter languages with the Streaming API. Back then I kept my laptop running for a week to download data. Not a comfortable way to do it, especially if you decide to get more data. A year of uptime doesn’t sound like anything you want to be part of. OpenShift by Red Hat seems to be an almost perfect replacement. Almost.

OpenShift setup

I started with a Node.js application running on one small gear. Once it’s running, you can easily git push the code to your OpenShift repo and log in via SSH. I quickly found that simply copy-pasting my local solution wasn’t going to work, and fixed it with some minor tweaks. That’s where the fun begins…

I based the downloader on Node.js a year ago. To this day I still don’t get how that piece of software works. Frankly, I don’t really care as long as it works.

Pitfalls

If your application doesn’t generate any traffic, OpenShift turns it off. It wakes up once someone visits again. I had no idea about that and spent some time trying to stop that behavior. Obviously, I could have scheduled a cron job on my laptop to ping it every now and then. Luckily, OpenShift can run cron jobs itself. All you need is to embed a cron cartridge into the running application (and install a bunch of Ruby dependencies beforehand).

rhc cartridge add cron-1.4 -a app-name

Then create the .openshift/cron/{hourly,daily,weekly,monthly} folders in the git repository and put a script running a simple curl command into one of them.

curl http://social-zimmi.rhcloud.com > /dev/null
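The steps above can be sketched as a small helper that writes the keep-alive script into the repository. The function name make_ping_script and the file name ping are hypothetical, and the chmod reflects my assumption that the cron cartridge only runs executable files.

```shell
#!/bin/bash

# Hypothetical helper: write an hourly keep-alive script into a repository.
# Usage: make_ping_script REPO_DIR URL
make_ping_script() {
  local dir="$1/.openshift/cron/hourly"
  local script="$dir/ping"
  mkdir -p "$dir"
  {
    echo '#!/bin/bash'
    echo '# Request the front page so the gear is not idled.'
    echo "curl --silent --output /dev/null '$2'"
  } > "$script"
  # Assumption: the cron cartridge only runs files with the executable bit set.
  chmod +x "$script"
}

# Usage (commented out), run from the repository root:
# make_ping_script . http://social-zimmi.rhcloud.com
```

The generated file still has to be added, committed and pushed before OpenShift picks it up.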

Another problem was just around the corner. Once in a while, the app stopped writing data to the database without saying a word. What helped was restarting it - the only automatic way to do so being a git push command. Sadly, I haven’t found a way to restart the app from within itself; it probably can’t be done.

When you git push, the gear stops, builds, deploys and restarts the app. By using hot deployment you can minimize the downtime. Just put a hot_deploy file into the .openshift/markers folder.

git commit --allow-empty -m "Restart gear" && git push

This solved the problem until I realized that every restart deleted all the data collected so far. If your data is to stay safe and sound, save it in process.env.OPENSHIFT_DATA_DIR (which is app-root/data).

Anacron to the rescue

How do you push an empty commit once a day? With cron of course. Even better, anacron.

mkdir ~/.anacron

cd ~/.anacron

mkdir cron.daily cron.weekly cron.monthly spool etc

cat <<EOT > ~/.anacron/etc/anacrontab

SHELL=/bin/sh

PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:$HOME/bin

HOME=$HOME

LOGNAME=$USER

1 5 daily-cron nice run-parts --report $HOME/.anacron/cron.daily

7 10 weekly-cron nice run-parts --report $HOME/.anacron/cron.weekly

@monthly 15 monthly-cron nice run-parts --report $HOME/.anacron/cron.monthly

EOT

cat <<EOT >> ~/.zprofile # I use zsh shell

rm -f $HOME/.anacron/anacron.log

/usr/sbin/anacron -t /home/zimmi/.anacron/etc/anacrontab -S /home/zimmi/.anacron/spool &> /home/zimmi/.anacron/anacron.log

EOT

Anacron is to a laptop what cron is to a server running 24/7. It runs scheduled jobs only while the laptop is on. If the laptop was off when a job was due, anacron runs the job once the OS boots. Brilliant idea.

It runs the following code for me to keep the app writing data to the database.

#!/bin/bash

workdir='/home/zimmi/documents/zimmi/dizertace/social'

logfile=$workdir/restart-gear.log

date > $logfile

{

HOME=/home/zimmi

cd $workdir && \

git merge origin/master && \

git commit --allow-empty -m "Restart gear" && \

git push && \

echo "Success" ;

} >> $logfile 2>&1

UPDATE: Spent a long time debugging the “Permission denied (publickey).”-like errors. What seems to help is:

- Use id_rsa instead of any other SSH key

- Put a new entry into the ~/.ssh/config file

I don’t know which one did the magic though.
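For illustration, an entry of that kind might look like the sketch below. The exact entry I added is not recorded here, so every value is an assumption: the Host pattern, the key path and the IdentitiesOnly setting are simply a common way to pin one key to a group of hosts. The function name is hypothetical.

```shell
#!/bin/bash

# Hypothetical helper: print an SSH client config entry that pins id_rsa
# for all rhcloud.com hosts. All values are assumptions, not the original entry.
print_openshift_ssh_entry() {
  cat <<'EOT'
Host *.rhcloud.com
    IdentityFile ~/.ssh/id_rsa
    IdentitiesOnly yes
EOT
}

# Usage (commented out): append the entry to the SSH client config.
# print_openshift_ssh_entry >> ~/.ssh/config
```

IdentitiesOnly tells ssh to offer only the listed key, which avoids failures caused by an agent offering other keys first.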

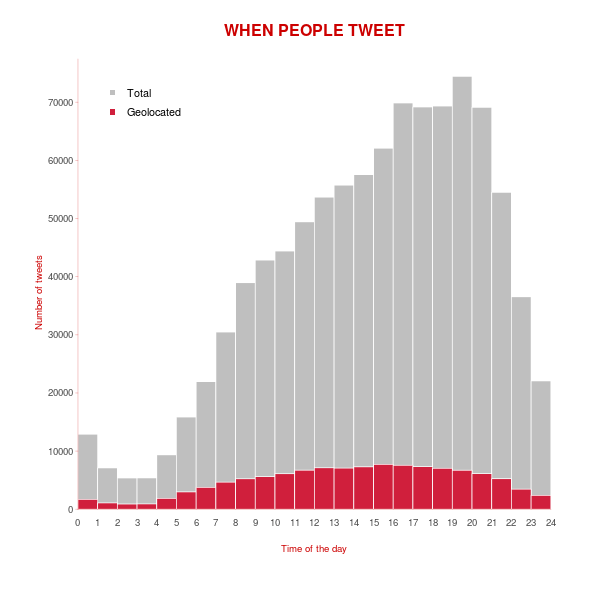

I’ve been harvesting Twitter for a month, collecting about 10-15K tweets a day (I’m only interested in the Czech Republic).

Between 1/6 and 1/5 of them are geotagged with latitude and longitude. More on this next time.

I decided to migrate my website to OpenShift. It was a bit frustrating but I got it working eventually.

Things to know before taking the leap

Some domain providers don’t support CNAME records for root domains (zimmi.cz in my case). This means you can’t simply tell your domain to serve content from an OpenShift address. What you can do is tell your www subdomain to do so:

www.zimmi.cz CNAME hp-zimmi.rhcloud.com

Which is great until you realize you’ve just created two different websites. That’s where wwwizer lends you a hand and lets you redirect your naked domain to your www domain:

zimmi.cz A 174.129.25.170

Now everything works fine and you have your www.domain.tld up and running.

OpenShift subdomains

I didn’t manage to create a subdomain on the same application that serves my domain. This is easily solved by creating another application and pointing DNS to it:

posts.zimmi.cz A 174.129.25.170

www.posts.zimmi.cz CNAME posts-zimmi.rhcloud.com

Just don’t forget to handle both the naked and the www version. Once Google has reindexed the new URLs (http://www.zimmi.cz/posts instead of http://posts.zimmi.cz), the subdomain application can be deleted.