I’ve been sort of living in Brno for the last 7 years (college included). It’s quite a hilly city, with lots of cars, a very good public transportation system and ever-improving cycling infrastructure. All these years I have been using trams, buses and trolleybuses to get myself from one place to another.

These are all great, because:

- you can read while you ride

- you are generally faster than a car during rush hour

- you don’t waste your time trying to park your car

These all suck, because:

- public transportation is quite expensive in Brno

- people stink in summer

- some people stink in winter as well

- you usually have to change lines several times to actually get where you want to go

- they’re crowded

My season ticket expired on March 8 and I decided not to renew it. Why? See the list above. As I don’t have a car and I work at the far end of the city, I can either ride a bike or run to work. How has the first month been? Not bad at all.

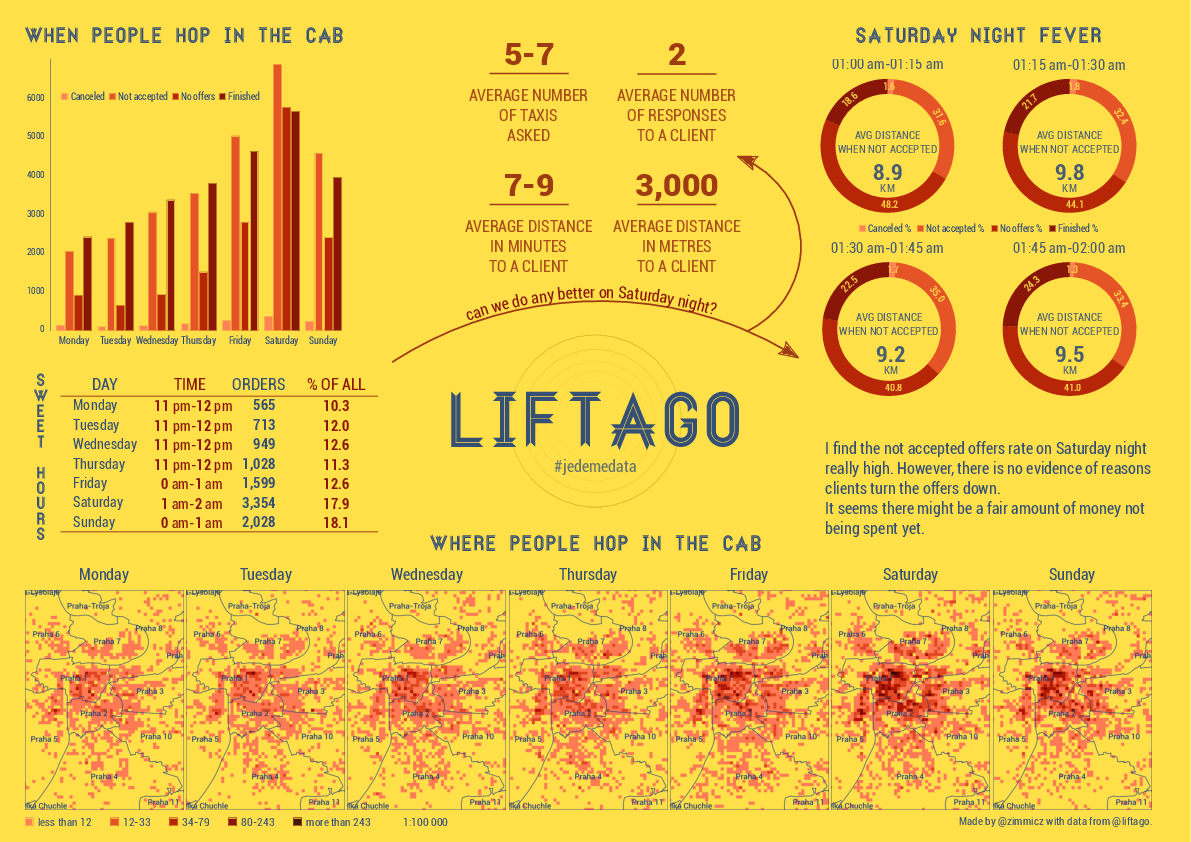

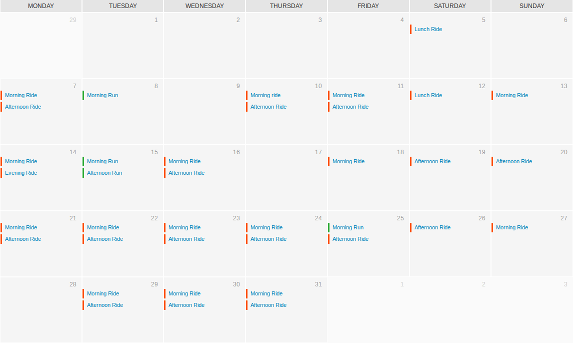

Figure: March Strava log.

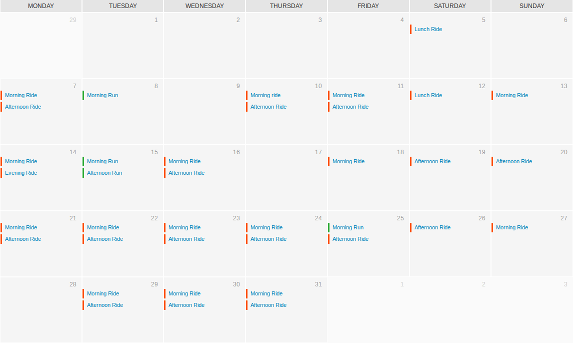

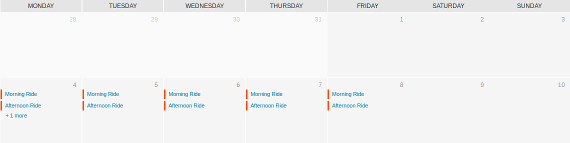

Figure: April Strava log.

What’s so great about commuting?

Not so long ago I considered commuting a waste of time. It took me 40-50 minutes to get to work and about the same to get back home. That’s 1-2 hours a day of not being productive, not doing anything at all actually, just changing places.

That’s a terrible mistake to make. It’s much better to see this time as an opportunity to do that little extra for yourself - walk, run, ride. Even though it takes me a bit longer than public transport (showering and dressing included), it leaves me in a totally different state of mind in the end - it just starts me up (hello Rolling Stones).

You can go for a ride right from work. That’s priceless.

As a by-product I started to care more about what I eat and when I eat it. I actually spend time cooking so I get enough food during the day. Something I didn’t do before, because you can always buy something sweet before the bus comes, right?

Figure: Daily commute in Brno: bike in pink, run in green. See the full version.

What’s not so great about commuting?

Weather, especially in spring and autumn, often sucks. Sometimes I come to work soaking wet, nothing a hot shower wouldn’t fix though. Someone still needs to clean the bike…

Traffic sucks in the evening. I get up before six and leave home before half past six, so I avoid heavy traffic in the morning. Riding a bike home in the evening feels threatening sometimes, and a bit of mutual respect between pedestrians, cyclists and drivers would help.

Cycling paths sometimes end right before big crossroads. Drivers often use parts of the network as parking lanes, which suddenly puts you in danger.

Other cyclists, skaters, people walking their dogs and little kids usually don’t care about you at all. You’d better not get distracted if you want to get home safe and sound.

Books are hard to read on the bike.

Does it tell you something about your city?

I guess the city you see on foot or from atop a saddle is completely different than the one seen from a bus or a car.

Is it more car-friendly or bike-friendly? Do you feel at risk riding a bike or running? Is it faster to run/ride or drive? Does your city actually want you to leave your car at home at all, or has it been designed for cars?

A river seems to be a blessing when your city has one (unless a flood strikes, but that’s a different story). If done right, its banks might become one of the most beautiful parts of the city. This is where Brno needs to catch up with other cities.

I hope one day I’ll get up and see Brno changing in front of me. Just like Paris is right now. We all die in the end, so why not take a walk before we do?

Disclaimer: I’m an enthusiastic developer, but I do not code for a living. I’m just an ordinary guy who keeps editing the wrong file, wondering why the heck the changes are not being applied.

TL;DR: I do think npm might be the answer.

Wonderful world of JavaScript DevOps

When I first started using JavaScript on the server side with node.js, I felt overwhelmed by the numerous options for automating tasks. There was npm taking care of backend dependencies. Then I built a frontend and found out about bower for handling frontend dependencies. Then it would be great to have some kind of minification/obfuscation/uglification/you-name-it task. And the build task. And the build:prod task. And how about an eslint task? And then I would end up spending hours doing nothing, just reading blogs about the tools used by others who do code for a living.

Intermezzo: I think my coding is slow. Definitely slower than yours. I’m getting better though.

Using the force

Looking back I find it a bit stressful - how the heck do I choose the right tools? Where’s Yoda to help me out? Anyway, next to adopt after npm was bower. And I liked it, even though some packages were missing - but who cares as long as there is no better way, right? Except there is… I guess.

Automation was next in line to tackle. So I chose gulp without a bit of hesitation. It was all the hype, bigger than grunt back then. I even heard of yeoman, but to this day I still don’t know what it actually does. And I’m happy with that.

A short summary so far:

- npm for backend dependencies

- bower for frontend dependencies

- gulp for running tasks

So far, so good.

Is Bower going to die?

Then I stumbled upon this tweet and started panicking. Or rather started to feel cheated. It took me time to set all this up and now it’s useless? Or what?

Seeing it now, I’m glad I read this. And I really don’t know what happened to Bower, if anything at all.

Keeping it simple

So Bower’s dying, what are you going to do about that? You’ll use npm instead! And you’ll have a single source of truth called package.json. You’ll resolve all the dependencies with a single npm install command and feel like a king. We’re down to two now - npm and gulp.

Gulp, Gulp everywhere!

When you get rid of Bower, the next feeling you have is that your gulpfile.js just got off the leash. It grew to ~160 lines of code and became a nightmare to manage.

So you split it into task files and a config file. What a relief. But you still realize that half of your package.json dependencies start with gulp-. And you hate it.

Webpack for the win

For me, a non-developer, setting up webpack wasn’t easy. I didn’t find the docs very helpful either. Reading the website for the first time, I didn’t even understand what it should be used for. I got it working eventually. And I got rid of gulp, gulp-connect, gulp-less, gulp-nodemon, gulp-rename, gulp-replace, gulp-task-listing and gutil. And the whole gulpfile.js. That was a big win for me.

But how do you run tasks?

Well…

npm run start-dev # which in turn calls the code below

npm run start-webpack & NODE_ENV=development nodemon server.js # where start-webpack does the following

node_modules/webpack-dev-server/bin/webpack-dev-server.js --quiet --inline --hot --watch

That’s it. If I need to build the code, I run npm run build, which calls some other tasks from the scripts section of package.json.

That’s pretty much it. I don’t think it’s a silver bullet, but I feel like I finally found peace of mind for my future JavaScript development. At least for a month or so before some other guy comes to town.

We have to deal with DGN drawings quite often at CleverMaps - heavily used for infrastructure projects (highways, roads, pipelines), they are a pure nightmare to the GIS person inside me. Right now, I’m only capable of converting them into raster files and serving them with GeoServer. The transformation from DGN to PDF to PNG to TIFF is not something that makes me utterly happy though.

All you need to do the same is GDAL, ImageMagick, some PDF documents created out of DGN files - something MicroStation can help you with - and their upper left and lower right corner coordinates.

# I recommend putting some limits on ImageMagick - it tends to eat up all the resources and quit
export MAGICK_MEMORY_LIMIT=1512
export MAGICK_MAP_LIMIT=512
export MAGICK_AREA_LIMIT=1024
export MAGICK_FILE_LIMIT=512
export MAGICK_TMPDIR=/partition/large/enough

# I expect two files on the input: the first is a PDF file with the drawing, the second is a simple text file with four coordinates on a single line in the following order: upper left x, upper left y, lower right x, lower right y
INPUT=${1:?"PDF file path"}
COORDS=${2:?"Bounding box file path"}
OUTPUTDIRNAME=$(dirname "$INPUT")
OUTPUTFILENAME=$(basename "$INPUT" | cut -d. -f1).png
OUTPUTPATH=$OUTPUTDIRNAME/$OUTPUTFILENAME

# create PNG image - I actually don't remember why it didn't work directly to Tiff
# -a_srs EPSG:5514 is the Czech local CRS
# -a_ullr reads the file with coordinates (unquoted on purpose, so it splits into four arguments)
gdal_translate \
    -co WORLDFILE=YES \
    -co ZLEVEL=5 \
    -of PNG \
    --config GDAL_CACHEMAX 500 \
    --config GDAL_PDF_DPI 300 \
    -a_srs EPSG:5514 \
    -a_ullr $(cat "$COORDS") \
    "$INPUT" \
    "$OUTPUTPATH"

# convert to Tiff image - drawings come with white background, so white is made transparent
convert \
    -define tiff:tile-geometry=256x256 \
    -transparent white \
    "$OUTPUTPATH" \
    "${OUTPUTPATH%.png}_alpha.tif"

# build overviews to speed things up
gdaladdo "${OUTPUTPATH%.png}_alpha.tif" 2 4 8 16 32

And you’re done. A .wld file will be created for each resulting PNG. I rename it manually to match the name of the GeoTIFF - that should probably be done automatically as well.
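The manual renaming step could probably be automated along these lines. This is only a sketch: the function name copy_worldfile is made up for illustration, and it assumes the world file sits next to the PNG as name.wld while the TIFF is called name_alpha.tif, as in the script above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): copy the world file written next to the PNG
# so that its name matches the TIFF produced by the conversion step.
# Assumed naming: <name>.png + <name>.wld -> <name>_alpha.tif + <name>_alpha.wld
copy_worldfile() {
    local png_path=$1
    local base=${png_path%.png}
    local source_wld=$base.wld
    local target_wld=${base}_alpha.wld

    # Fail loudly if the PNG step did not produce a world file
    if [ ! -f "$source_wld" ]; then
        echo "World file not found: $source_wld" >&2
        return 1
    fi

    cp "$source_wld" "$target_wld"
}
```

Calling copy_worldfile "$OUTPUTPATH" at the very end of the script should be enough. Copying instead of moving keeps the PNG georeferenced as well, which is a matter of taste.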

Using JOIN clause

All my GIS life I’ve been using a simple JOIN clause to find a row with an id = previous_id + 1. In other words, imagine a simple table with no indices:

CREATE TABLE test (id integer);

INSERT INTO test SELECT i FROM generate_series(1,10000000) i;

Let’s retrieve the next row for each row in that table:

SELECT a.id, b.id
FROM test a
LEFT JOIN test b ON (a.id + 1 = b.id); -- note the LEFT JOIN is needed to get the last row as well

The execution plan looks like this:

Hash Join (cost=311087.17..953199.41 rows=10088363 width=8) (actual time=25440.770..79591.869 rows=10000000 loops=1)
  Hash Cond: ((a.id + 1) = b.id)
  -> Seq Scan on test a (cost=0.00..145574.63 rows=10088363 width=4) (actual time=0.588..10801.584 rows=10000001 loops=1)
  -> Hash (cost=145574.63..145574.63 rows=10088363 width=4) (actual time=25415.282..25415.282 rows=10000001 loops=1)
       Buckets: 16384 Batches: 128 Memory Usage: 2778kB
       -> Seq Scan on test b (cost=0.00..145574.63 rows=10088363 width=4) (actual time=0.422..11356.108 rows=10000001 loops=1)
Planning time: 0.155 ms
Execution time: 90134.248 ms

If we add an index with CREATE INDEX ON test (id), the plan changes:

Merge Join (cost=0.87..669369.85 rows=9999844 width=8) (actual time=0.035..56219.294 rows=10000001 loops=1)
  Merge Cond: (a.id = b.id)
  -> Index Only Scan using test_id_idx on test a (cost=0.43..259686.10 rows=9999844 width=4) (actual time=0.015..11101.937 rows=10000001 loops=1)
       Heap Fetches: 0
  -> Index Only Scan using test_id_idx on test b (cost=0.43..259686.10 rows=9999844 width=4) (actual time=0.012..11827.895 rows=10000001 loops=1)
       Heap Fetches: 0
Planning time: 0.244 ms
Execution time: 65973.421 ms

Not bad.

Using window function

Window functions are real fun. They’re great if you’re doing counts, sums or ranks by groups. And, to my surprise, they’re great at finding next rows as well.

With the same test table, we retrieve the next row for each row with the following query:

SELECT id, lead(id) OVER (ORDER BY id)
FROM test;

How does that score without an index? Better than the JOIN clause.

WindowAgg (cost=1581246.90..1756294.50 rows=10002720 width=4) (actual time=28785.388..63819.071 rows=10000001 loops=1)
  -> Sort (cost=1581246.90..1606253.70 rows=10002720 width=4) (actual time=28785.354..40117.899 rows=10000001 loops=1)
       Sort Key: id
       Sort Method: external merge Disk: 136848kB
       -> Seq Scan on test (cost=0.00..144718.20 rows=10002720 width=4) (actual time=0.020..10797.961 rows=10000001 loops=1)
Planning time: 0.242 ms
Execution time: 73391.024 ms

And it works even better if indexed. It’s actually ~1.5× faster than the JOIN way.

WindowAgg (cost=0.43..409770.03 rows=10002720 width=4) (actual time=0.087..35647.815 rows=10000001 loops=1)
  -> Index Only Scan using test_id_idx on test (cost=0.43..259729.23 rows=10002720 width=4) (actual time=0.059..11310.879 rows=10000001 loops=1)
       Heap Fetches: 0
Planning time: 0.247 ms
Execution time: 45388.202 ms

It reads well and the purpose of such a query is pretty obvious.
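For the record, here is how the speedups work out when taken straight from the execution times reported in the plans above (JOIN time divided by window function time, rounded to two decimals):

$$
\text{with index:}\quad \frac{65973.421\ \text{ms}}{45388.202\ \text{ms}} \approx 1.45
\qquad
\text{without index:}\quad \frac{90134.248\ \text{ms}}{73391.024\ \text{ms}} \approx 1.23
$$

These are single runs on one machine, so treat the ratios as a rough indication rather than a benchmark.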

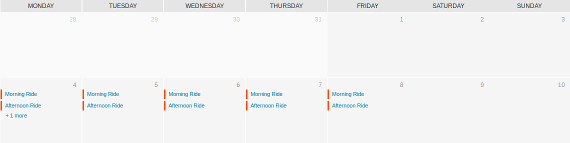

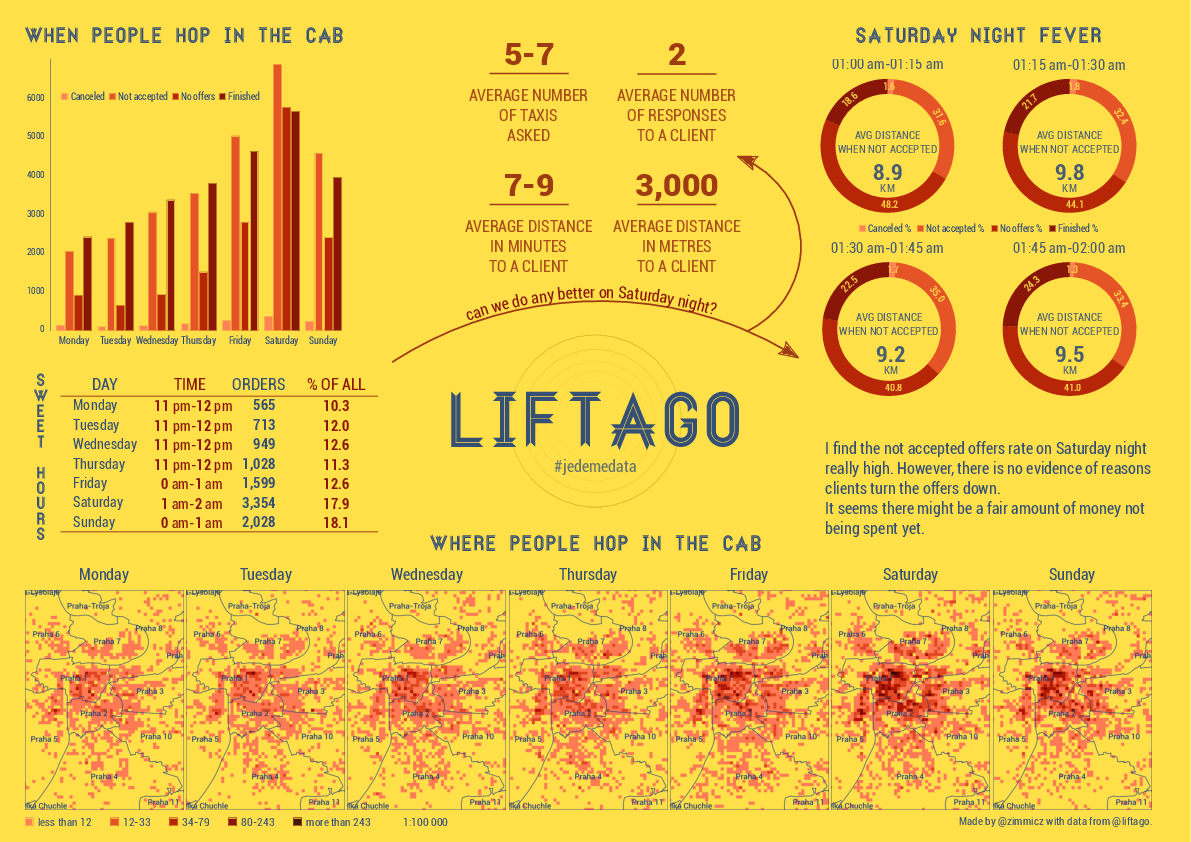

Liftago (the Czech analogue of Uber) has recently released a sample of its data covering four weeks of driver/passenger interactions.

Have a look at my infographics created with PostGIS, Inkscape, Python and pygal.