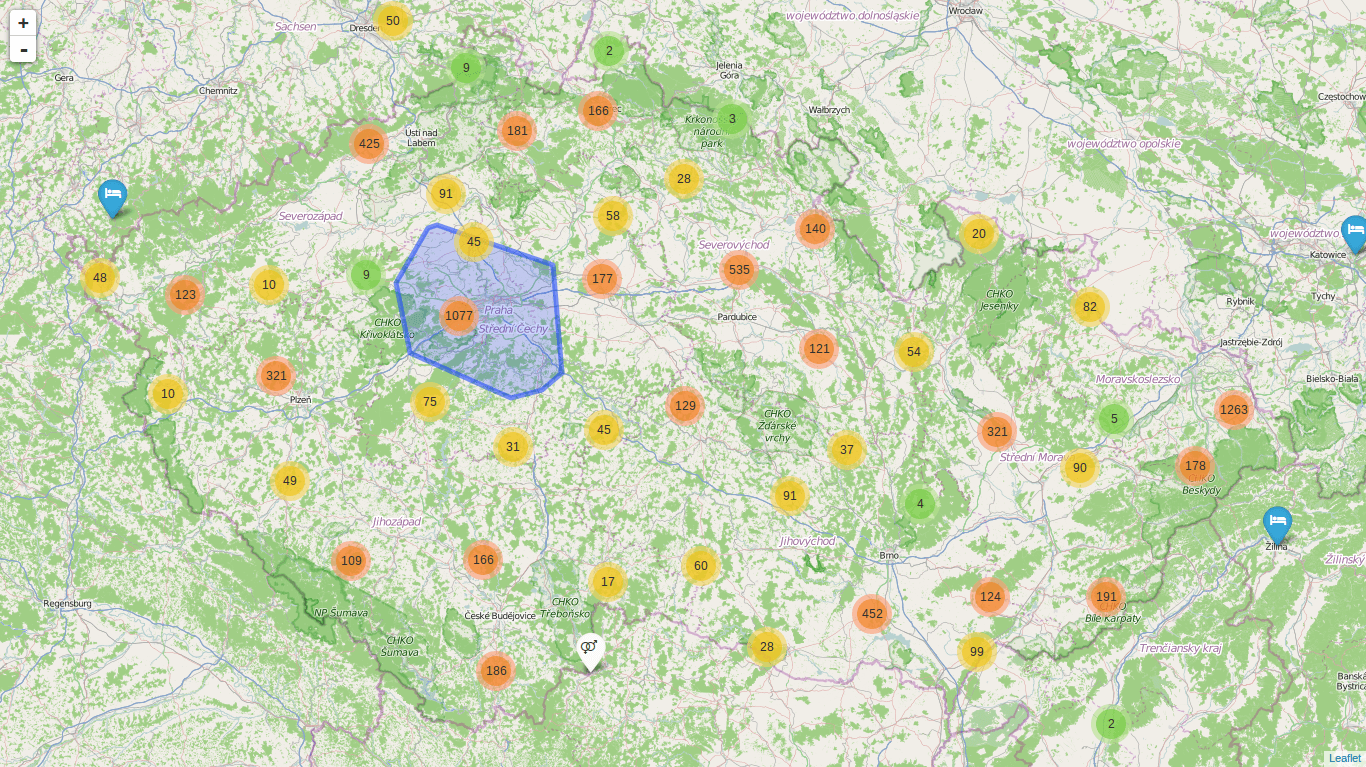

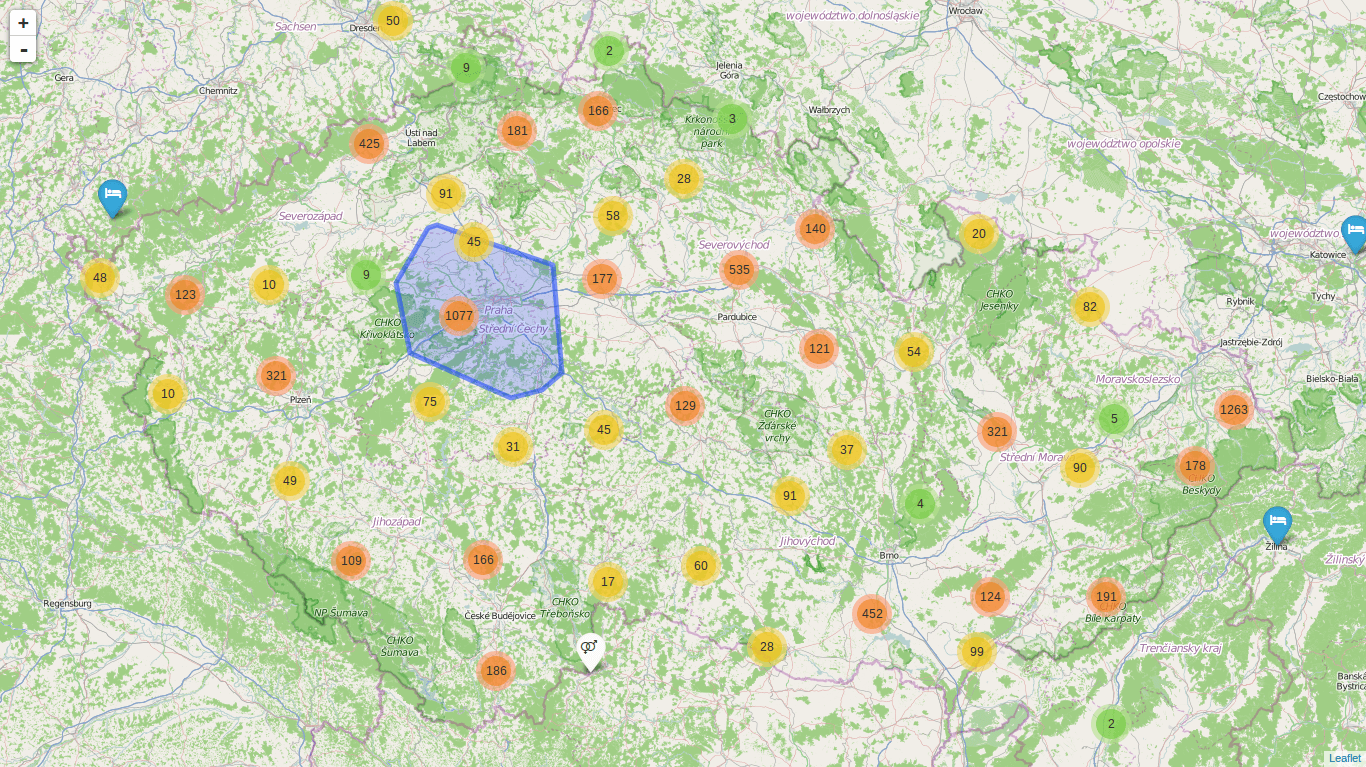

In the first part of my little case study, I downloaded the vozejkmap.cz dataset and imported it into a PostGIS database. With the spatial data safely stored, it’s time to get it onto the map. Libraries used are:

I’m teaching cartographic visualization classes this semester, and this map should serve well as an example of what can be done with online maps.

Retrieving data from the PostGIS database

Our goal is to build the whole map as a static HTML page without any backend logic. Thus, the data needs to be extracted from the database into a format Leaflet can read: GeoJSON.

That’s fairly easy with the postgresonline.com tutorial. It took me quite some time to figure out what the following query does; splitting it into smaller chunks helped a lot.

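```sql
-- Outer level: turn the single FeatureCollection row into one JSON object
SELECT row_to_json(fc)
FROM (
    -- Middle level: collect all features into one JSON array
    SELECT 'FeatureCollection' AS type,
           array_to_json(array_agg(f)) AS features
    FROM (
        -- Inner level: one row per feature with type, geometry and properties
        SELECT 'Feature' AS type,
               ST_AsGeoJSON(lg.geom)::json AS geometry,
               row_to_json((SELECT l FROM (SELECT id, title, location_type, description,
                                                  author_name, attr1, attr2, attr3) AS l
               )) AS properties
        FROM vozejkmap AS lg
    ) AS f
) AS fc \g /path/to/file.json
```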

To get all rows with the type, geometry and properties columns (the members defined by the GeoJSON specification, see the link above), run this:

```sql
SELECT 'Feature' AS type,
       ST_AsGeoJSON(lg.geom)::json AS geometry,
       row_to_json((SELECT l FROM (SELECT id, title, location_type, description,
                                          author_name, attr1, attr2, attr3) AS l
       )) AS properties
FROM vozejkmap AS lg
```

array_agg() squashes all those rows into an array, while array_to_json() turns that array into JSON. Together with the 'FeatureCollection' type, this gives:

```sql
SELECT 'FeatureCollection' AS type,
       array_to_json(array_agg(f)) AS features
FROM (SELECT 'Feature' AS type,
             ST_AsGeoJSON(lg.geom)::json AS geometry,
             row_to_json((SELECT l FROM (SELECT id, title, location_type, description,
                                                author_name, attr1, attr2, attr3) AS l
             )) AS properties
      FROM vozejkmap AS lg) AS f
```

In the last step (the whole query shown above), row_to_json() turns that FeatureCollection row into a single JSON object, which is the GeoJSON document Leaflet needs.

Caveats

If you run this code from the psql console, be sure to

- turn on tuples-only output (rows only, no headers or footers) with `\t on`
- turn off expanded output with `\x off`

If you don’t, you’ll end up with lots of hyphens and column names saved to the JSON file.
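If you’d rather not fiddle with console settings, psql command-line flags give the same result. Below is a minimal sketch. It assumes the query above is saved to a file that ends with a plain `;` instead of the `\g` part. The `export_geojson` function and the file names in the usage line are hypothetical.

```shell
#!/bin/sh
# Hypothetical wrapper: run the GeoJSON query non-interactively and write
# only the JSON document into the output file.
#   -X  do not read ~/.psqlrc (it could switch headers or expanded mode back on)
#   -A  unaligned output, no padding around the value
#   -t  tuples only: no column names, no hyphen ruler, no row-count footer
#   -d  database to connect to
#   -f  read the query from a file
#   -o  write query results to this file instead of stdout
export_geojson() {
  psql -X -A -t -d "$1" -f "$2" -o "$3"
}
```

Usage: `export_geojson mydb geojson.sql /path/to/file.json`. The database and file names are placeholders.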

Leaflet map

The map JavaScript is rather simple, about 30 lines of code (not counting styles). Thanks to some great plugins, showing ~7,600 points on the map is quick and easy.

I didn’t do much customization apart from styling markers and binding popups.

What’s next

- Turf, which means I need to think of something fun to do with this data
- Layer switching
- Map key (by extending L.Control)

The code is still available on my GitHub.

We’ve rolled out a completely new map GUI at edpp.cz, built on top of OpenLayers 3. It looks great and has lots of features for both ordinary users and power users. The only pitfall of moving away from OpenLayers 2 was a notable difference in zoom levels between the old map and the new one.

Each of our maps is defined by our admins (center, zoom level, layers) when the map is created. Lots of links calling different views of the map are created as well. They take the form of http://edpp.cz/some-map?0=0&1=0...zoom=5. That zoom=<Number> started causing trouble immediately after the switch. There was no way my colleagues would update them one by one, as there were ~4,500 of them. Sounds like a task for a few regular expressions and some SQL updates.

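```sql
-- table and column are placeholders for the real table and its URL column;
-- \d+ matches the whole number, so two-digit levels like zoom=12 stay intact
UPDATE table
SET column = regexp_replace(column, 'zoom=\d+', 'zoom=' || subquery.zoom, 'g')
FROM (
    -- Extract the current zoom level from each link and add 2 to it
    SELECT regexp_replace(
               substring(column from 'zoom=\d+'),
               'zoom=(\d+)',
               '\1',
               'g')::integer + 2 AS zoom,
           guid
    FROM table
) AS subquery
WHERE column ~ 'zoom=\d+'
  AND table.guid = subquery.guid;
```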

That’s what I came up with. It extracts the zoom level from each link, adds two to it and writes it back into the string.
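Before touching thousands of rows, it can help to sanity-check the transformation on a single link. Here’s a minimal POSIX sh sketch of the same idea using awk’s `match()`. The `bump_zoom` function is hypothetical and not part of the original workflow, and it rewrites only the first zoom=<Number> in a link.

```shell
#!/bin/sh
# Hypothetical helper: add 2 to the first zoom=<Number> parameter of a link.
bump_zoom() {
  printf '%s\n' "$1" | awk '{
    # match() finds the first "zoom=" followed by one or more digits,
    # so zoom=12 becomes zoom=14 rather than zoom=32
    if (match($0, /zoom=[0-9]+/)) {
      level = substr($0, RSTART + 5, RLENGTH - 5) + 2
      $0 = substr($0, 1, RSTART - 1) "zoom=" level substr($0, RSTART + RLENGTH)
    }
    print
  }'
}

bump_zoom 'http://edpp.cz/some-map?zoom=5'   # prints http://edpp.cz/some-map?zoom=7
```

Links without a zoom parameter are printed unchanged.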

VozejkMap.cz is a Czech open data initiative that collects data about wheelchair-accessible places, e.g. pubs, toilets, cafes etc. As part of being open, they offer a JSON data download. JSON is a great text format, though not so great a spatial format (leaving GeoJSON aside). Anyway, nothing PostGIS couldn’t take care of.

Let’s get some data

Using curl or wget, let’s download the JSON file:

```shell
wget -O /tmp/locations.json http://www.vozejkmap.cz/opendata/locations.json
```

We need to put each record on its own line so that each point is loaded into its own row:

```shell
# Insert a newline before every "},{" so each record starts on its own line (GNU sed)
sed -i 's/\},{/\n},{/g' /tmp/locations.json
```
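To see what the substitution does, here’s a tiny sketch on a made-up three-record string in the same shape as locations.json. The sample and the `split_records` helper are hypothetical, and `\n` in the replacement is a GNU sed feature. Each record ends up on its own line, while the leading `},{` and `[{` leftovers stay in the text.

```shell
#!/bin/sh
# Hypothetical three-record sample in the same shape as locations.json
sample='[{"id":"1"},{"id":"2"},{"id":"3"}]'

# Same idea as the sed call above: insert a newline before every "},{"
# (GNU sed turns \n in the replacement into a real newline)
split_records() {
  printf '%s\n' "$1" | sed 's/},{/\n},{/g'
}

split_records "$sample"
# [{"id":"1"
# },{"id":"2"
# },{"id":"3"}]
```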

If you peek into the file, you’ll see lots of escaped Unicode characters (\uXXXX sequences) we don’t want in our pretty little table. Here’s how we get rid of them by letting bash’s built-in echo decode them into real characters:

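```shell
# echo -e decodes the \uXXXX escapes; the result goes to a temporary file
# that then replaces the original
echo -en "$(cat /tmp/locations.json)" > /tmp/locations_decoded.json
mv /tmp/locations_decoded.json /tmp/locations.json
```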

Let’s load the data

Let’s just be nice and leave the public schema clean.

```sql
CREATE SCHEMA vozejkmap;
SET search_path=vozejkmap, public;
```

Load the data:

```sql
CREATE TABLE vozejkmap_raw(id SERIAL PRIMARY KEY, raw text);
COPY vozejkmap_raw(raw) FROM '/tmp/locations.json' DELIMITERS '#' ESCAPE '\' CSV;
```

A few notes:

- I’m using the /tmp folder to avoid permission-denied issues: COPY ... FROM 'file' is read by the PostgreSQL server process, not by psql, so the file has to be readable by the server.
- By setting DELIMITERS to #, we tell PostgreSQL to load each whole line into one column, because it is safe to assume there is no such character in our data.
- ESCAPE needs to be set because there is one trailing quote in the dataset.
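The assumption that # never appears in the data is cheap to verify before running COPY. Here’s a minimal POSIX sh sketch; the `delimiter_absent` helper is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) only if FILE ($1) never contains
# DELIM ($2), i.e. COPY with that delimiter keeps each line in one column.
delimiter_absent() {
  if [ ! -r "$1" ]; then
    echo "cannot read $1" >&2
    return 2
  fi
  # -F: treat the delimiter literally, -q: only the exit status matters
  ! grep -qF -- "$2" "$1"
}
```

Usage: `delimiter_absent /tmp/locations.json '#' && echo "safe to use # as the COPY delimiter"`.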

Let’s get dirty with spatial data

Great, now what? We loaded all the data into one column. That’s not very useful, is it? How about splitting it into separate columns with this query? Shall we call it split_part hell?

```sql
-- Each column follows the same pattern: take the text after "<key>:" and cut it
-- off at the next ",<key>:". The keys carry no double quotes because COPY in
-- CSV mode has already stripped them.
CREATE TABLE vozejkmap AS
SELECT
    id,
    trim(split_part(split_part(raw, 'title:', 2), ',location_type:', 1)) AS title,
    trim(split_part(split_part(raw, 'location_type:', 2), ',description:', 1))::integer AS location_type,
    trim(split_part(split_part(raw, 'description:', 2), ',lat:', 1)) AS description,
    cast(trim(split_part(split_part(raw, 'lat:', 2), ',lng:', 1)) AS double precision) AS lat,
    cast(trim(split_part(split_part(raw, 'lng:', 2), ',attr1:', 1)) AS double precision) AS lng,
    trim(split_part(split_part(raw, 'attr1:', 2), ',attr2:', 1))::integer AS attr1,
    trim(split_part(split_part(raw, 'attr2:', 2), ',attr3:', 1)) AS attr2,
    trim(split_part(split_part(raw, 'attr3:', 2), ',author_name:', 1)) AS attr3,
    trim(split_part(split_part(raw, 'author_name:', 2), ',}:', 1)) AS author_name
FROM vozejkmap_raw;
```

It just splits the JSON data and creates a table out of it according to the VozejkMap.cz data specification. Before going on, we should create a table of location types so we can join their numeric codes to real names:

```sql
CREATE TABLE location_type (
    id integer PRIMARY KEY,
    description varchar(255)
);

-- Czech category names as used by VozejkMap.cz (English meaning in comments)
INSERT INTO location_type VALUES(1, 'Kultura');            -- culture
INSERT INTO location_type VALUES(2, 'Sport');              -- sport
INSERT INTO location_type VALUES(3, 'Instituce');          -- institutions
INSERT INTO location_type VALUES(4, 'Jídlo a pití');       -- food and drink
INSERT INTO location_type VALUES(5, 'Ubytování');          -- accommodation
INSERT INTO location_type VALUES(6, 'Lékaři, lékárny');    -- doctors, pharmacies
INSERT INTO location_type VALUES(7, 'Jiné');               -- other
INSERT INTO location_type VALUES(8, 'Doprava');            -- transport
INSERT INTO location_type VALUES(9, 'Veřejné WC');         -- public toilets
INSERT INTO location_type VALUES(10, 'Benzínka');          -- petrol station
INSERT INTO location_type VALUES(11, 'Obchod');            -- shop
INSERT INTO location_type VALUES(12, 'Banka, bankomat');   -- bank, ATM
INSERT INTO location_type VALUES(13, 'Parkoviště');        -- parking
INSERT INTO location_type VALUES(14, 'Prodejní a servisní místa Škoda Auto');  -- Škoda Auto sales and service points
```

Let’s build the geometry column, constraints and indexes. And don’t forget to clean up the mess (the vozejkmap_raw table).

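```sql
-- The raw import table is no longer needed
DROP TABLE vozejkmap_raw;

ALTER TABLE vozejkmap ADD PRIMARY KEY(id);

-- 4326 geometry is not very useful for measurements, I might get to that next time
ALTER TABLE vozejkmap ADD COLUMN geom geometry(point, 4326);

ALTER TABLE vozejkmap ADD CONSTRAINT loctype_fk FOREIGN KEY(location_type) REFERENCES location_type(id);

-- Build the points from the parsed coordinates (x = longitude, y = latitude)
UPDATE vozejkmap SET geom = ST_SetSRID(ST_MakePoint(lng, lat), 4326);

-- Spatial (GiST) index on the new geometry column; the index name is arbitrary
CREATE INDEX vozejkmap_geom_idx ON vozejkmap USING gist (geom);
```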

And here we are, ready to use our spatial data!

Feel free to grab the code on GitHub.

The PostGIS documentation for ST_Intersects clearly states that:

> This function call will automatically include a bounding box comparison that will make use of any indexes that are available on the geometries.

That means (or at least I think so) that you shouldn’t need to add the && bounding box operator yourself before calling this function.

While preparing my second lecture on PostGIS, I was experimenting a bit and came across something interesting on this matter:

Let’s have two SQL relations, roads and regions. I would like to retrieve every road that intersects a certain region. Spatial indexes were built beforehand on both tables.

First try:

```sql
EXPLAIN ANALYZE SELECT roads.* FROM roads
JOIN regions ON ST_Intersects(roads.geom, regions.geom)
WHERE regions."NAZEV" = 'Jihomoravský';
```

And here comes the result:

```text
Nested Loop (cost=4.85..324.26 rows=249 width=214) (actual time=45.102..5101.472 rows=74253 loops=1)
  -> Seq Scan on regions (cost=0.00..12.62 rows=1 width=32) (actual time=0.015..0.018 rows=1 loops=1)
        Filter: (("NAZEV")::text = 'Jihomoravský'::text)
        Rows Removed by Filter: 13
  -> Bitmap Heap Scan on roads (cost=4.85..311.38 rows=25 width=214) (actual time=45.079..4931.495 rows=74253 loops=1)
        Recheck Cond: (geom && regions.geom)
        Rows Removed by Index Recheck: 154841
        Filter: _st_intersects(geom, regions.geom)
        Rows Removed by Filter: 71212
        -> Bitmap Index Scan on roads_idx (cost=0.00..4.85 rows=75 width=0) (actual time=40.142..40.142 rows=145465 loops=1)
              Index Cond: (geom && regions.geom)
Total runtime: 5181.459 ms
```

I was pretty satisfied with the result, but I kept digging deeper.

Second try:

```sql
EXPLAIN ANALYZE SELECT roads.* FROM roads
JOIN regions ON roads.geom && regions.geom
WHERE regions."NAZEV" = 'Jihomoravský' AND ST_Intersects(roads.geom, regions.geom);
```

And the result:

```text
Nested Loop (cost=0.29..21.19 rows=1 width=214) (actual time=3.041..3850.302 rows=74253 loops=1)
  -> Seq Scan on regions (cost=0.00..12.62 rows=1 width=32) (actual time=0.021..0.024 rows=1 loops=1)
        Filter: (("NAZEV")::text = 'Jihomoravský'::text)
        Rows Removed by Filter: 13
  -> Index Scan using roads_idx on roads (cost=0.29..8.55 rows=1 width=214) (actual time=2.938..3681.432 rows=74253 loops=1)
        Index Cond: ((geom && regions.geom) AND (geom && regions.geom))
        Filter: _st_intersects(geom, regions.geom)
        Rows Removed by Filter: 71212
Total runtime: 3930.270 ms
```

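Now there’s a significant difference between the total runtimes of the two queries. More importantly, their query plans differ too: the first uses a Bitmap Heap Scan with an index recheck, while the second uses a plain Index Scan with the && condition listed twice. The second query takes about 24 % less time (3,930 ms vs. 5,181 ms).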

I’m puzzled by this behavior and would appreciate any thoughts on it. Reach me on Twitter, LinkedIn or by e-mail (zimmicz[at]gmail.com).